Nikhil Chowdary Gutlapalli

Bio: Software Engineer developing embedded software for an automotive terahertz sensor. Focused on real-time point cloud processing, post-processing, and system reliability.

For any collaborations, feel free to reach out to me!

Education

Master of Science in Robotics

- Concentration: Electronics and Computer Engineering

- Relevant coursework: Robotics Sensing and Navigation, Mobile Robotics

Bachelor of Technology in Electronics and Communication Engineering

- Relevant coursework: Computer Programming, Information Technology Essentials, Computer System Architecture, Optimization Techniques.

- Awards/Honors: Aspiring Minds Motivational Award (AMMA) by the Dept of ECE.

Experience

ML Software Engineer

- Worked on deep learning projects involving computer vision, convolutional neural networks, and optimization techniques for various clients in the US.

- Preprocessed and analyzed large-scale datasets using Python and PyTorch.

- Achieved a breakthrough in applying AI to radar technology: replacing angle beamforming (a signal processing technique) with neural networks in a next-generation automotive radar.

- Worked with a leading AI semiconductor vendor on libraries that provide advanced quantization and compression techniques for trained neural network models, and on the TensorRT SDK.

Student Researcher

- Worked on the robotics software stack, which includes auto-navigation, speech recognition, and computer vision. Focused on designing and developing the computer vision interface that assists the robotic arm and supports auto-navigation.

- Implemented Computer Vision algorithms to detect and recognize objects and obstacles in the robot's environment, allowing the robot to navigate safely and efficiently.

- Configured and implemented mapping, localization, and pose estimation algorithms using sensor fusion techniques for outdoor and indoor environments. Worked on integrating GPS, LIDAR, and IMU data to create accurate maps and determine the robot's location and orientation in real-time.

- Collaborated with cross-functional teams to deliver high-quality software products, including integrating the robot's software with hardware components such as motors and sensors.

- Contributed to research and development of new robotics technologies, including exploring machine learning and AI techniques for object recognition and autonomous decision-making.

Skills

- Python

- C++

- Deep Learning Techniques

- Machine Learning

- Gazebo

- Embedded Systems

- Computer Vision

- SLAM (Simultaneous Localization and Mapping)

- PyTorch

- Sensor Fusion

- TensorFlow

- ROS (Robot Operating System)

Projects

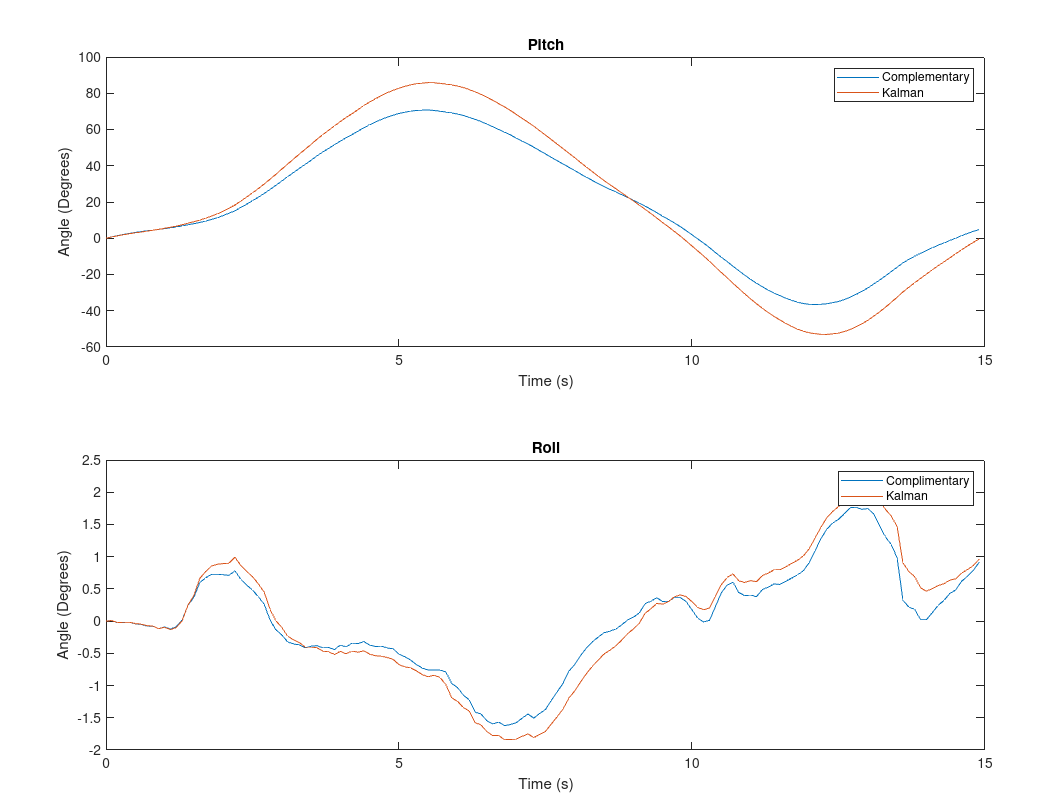

This project focuses on the development of a MATLAB-based Attitude Estimation system, designed to accurately determine the orientation of a mobile device using data from its accelerometer and gyroscope sensors. The code implements various estimation techniques, including accelerometer-only estimation, gyroscope-only estimation, a complementary filter, and a Kalman filter. It processes sensor data and provides estimates of roll, pitch, and yaw angles, enabling a comprehensive understanding of the device's orientation in 3D space.

Code
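As a rough illustration of the complementary filter mentioned in the attitude estimation project above, here is a minimal Python sketch. It is not the project's MATLAB code. The function names, the sample layout `(ax, ay, az, gx, gy)`, and the blend factor `alpha=0.98` are assumptions chosen for the example, and yaw is left out because an accelerometer alone cannot observe it.

```python
import math


def accel_to_roll_pitch(ax, ay, az):
    """Roll and pitch (radians) from an accelerometer reading dominated by gravity."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_filter(samples, dt, alpha=0.98):
    """Blend integrated gyro rates with accelerometer angles.

    samples: iterable of (ax, ay, az, gx, gy), gyro rates in rad/s.
    dt:      sample period in seconds.
    alpha:   weight on the gyro path (assumed value, tune per sensor).

    Body rates are integrated directly as Euler-angle rates, which is
    only a small-angle approximation.
    """
    roll = pitch = 0.0
    estimates = []
    for i, (ax, ay, az, gx, gy) in enumerate(samples):
        acc_roll, acc_pitch = accel_to_roll_pitch(ax, ay, az)
        if i == 0:
            # Initialise from the accelerometer so the filter starts aligned.
            roll, pitch = acc_roll, acc_pitch
        else:
            roll = alpha * (roll + gx * dt) + (1.0 - alpha) * acc_roll
            pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * acc_pitch
        estimates.append((roll, pitch))
    return estimates
```

The gyro term tracks fast motion, while the small accelerometer weight pulls the estimate back toward gravity and keeps gyro bias from accumulating without bound.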

In this project, we harnessed the synergy of 2D Lidar, ROS, and Gazebo to pioneer 3D mapping. Our journey began with crafting a dynamic robot in SolidWorks, boasting a Lidar poised to scan from 0 to +60 degrees. A bespoke Lidar plugin orchestrated data collection, translating laser scans into intricate point clouds. Augmented by the octomap library, these clouds unfurled into vivid 3D maps. This venture not only showcases technical finesse but also exemplifies the potential of autonomous spatial understanding.

Code
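The step of turning tilted 2D laser scans into a point cloud, described in the 3D mapping project above, can be sketched as follows. This is an illustrative sketch and not the project's Gazebo plugin. It assumes the Lidar tilts about its own y axis, that a positive tilt raises the beam, and that each scan is described by a start angle and a fixed angular increment (as in the `sensor_msgs/LaserScan` fields `angle_min` and `angle_increment`). The function names are hypothetical.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, tilt, range_max=float("inf")):
    """Convert one planar scan taken at a given tilt (radians) into 3D points."""
    points = []
    for i, r in enumerate(ranges):
        # Skip invalid returns (inf, NaN, non-positive, or beyond range_max).
        if not math.isfinite(r) or r <= 0.0 or r > range_max:
            continue
        theta = angle_min + i * angle_increment
        # Point in the scan plane of the sensor.
        x_s = r * math.cos(theta)
        y_s = r * math.sin(theta)
        # Rotate the scan plane upward by the tilt angle (assumed convention).
        points.append((x_s * math.cos(tilt), y_s, x_s * math.sin(tilt)))
    return points


def sweep_to_cloud(scans, angle_min, angle_increment):
    """Accumulate several (tilt, ranges) scans from one sweep into a single cloud."""
    cloud = []
    for tilt, ranges in scans:
        cloud.extend(scan_to_points(ranges, angle_min, angle_increment, tilt))
    return cloud
```

A cloud built this way is the kind of input a library such as octomap would consume to build a 3D occupancy map.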

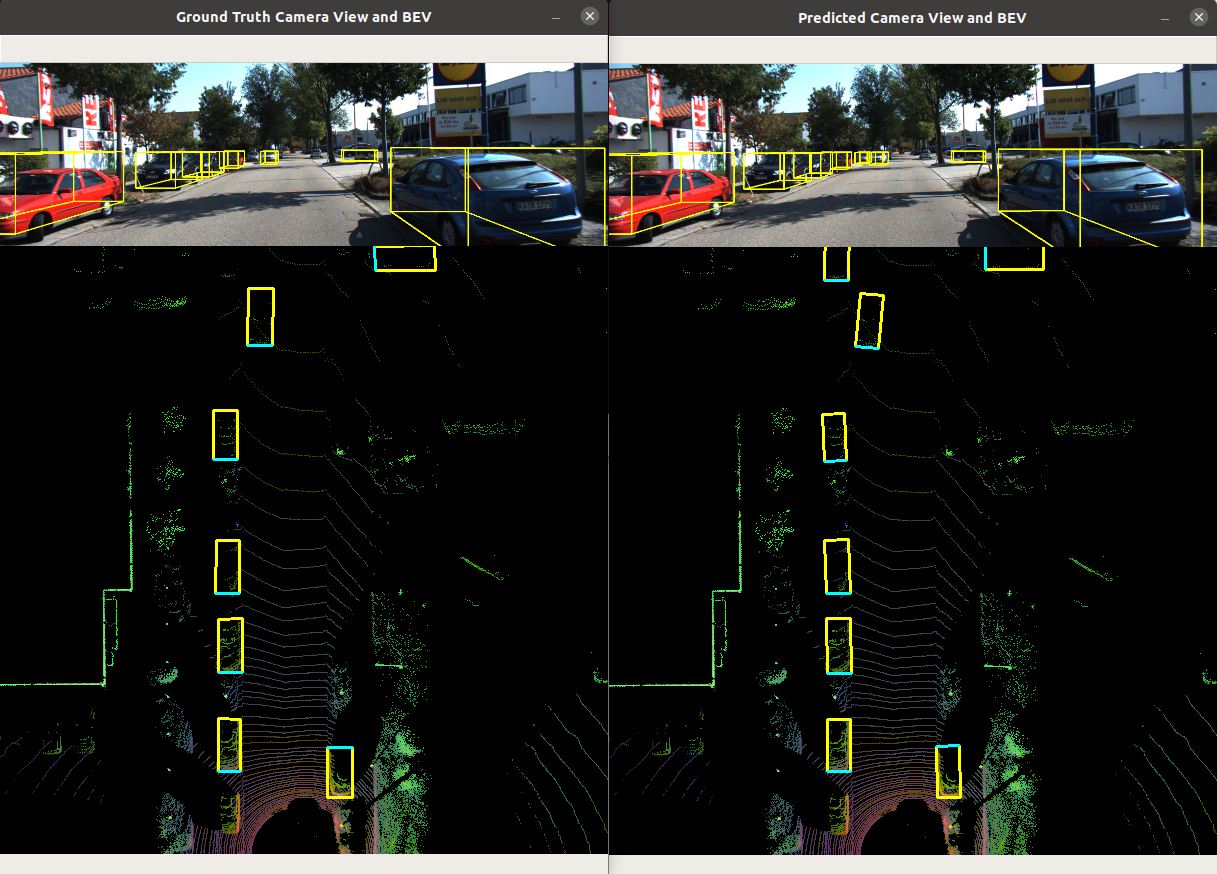

The Complex YOLO ROS 3D Object Detection project is an integration of the Complex YOLOv4 package into the ROS (Robot Operating System) platform, aimed at enhancing real-time perception capabilities for robotics applications. Using 3D object detection techniques based on Lidar data, the project enables robots and autonomous systems to accurately detect and localize objects in a 3D environment, crucial for safe navigation, obstacle avoidance, and intelligent decision-making.

Code

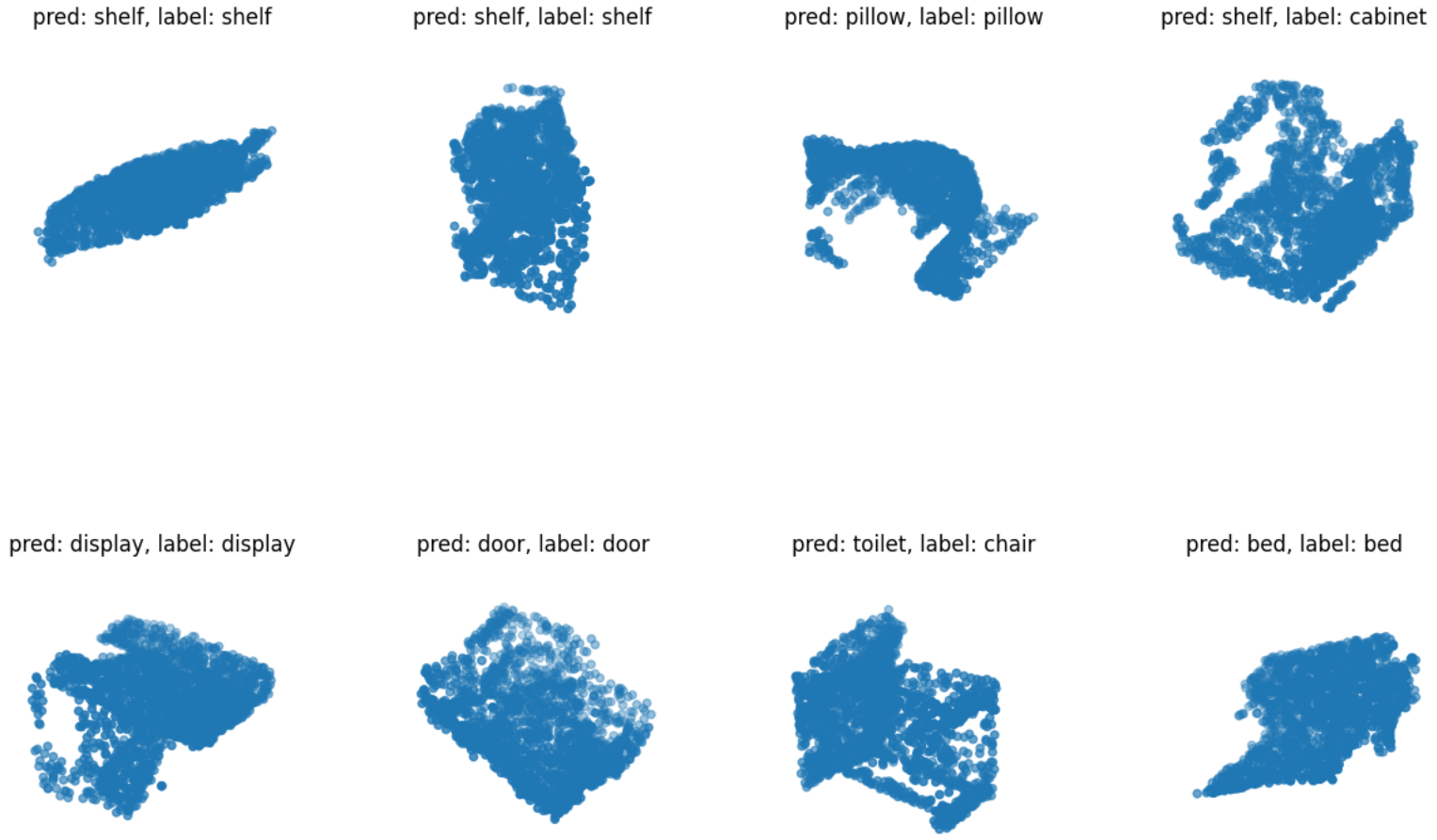

The Point Cloud Classification project implements the PointNet architecture for classifying 3D point clouds. It supports two datasets, ModelNet and ScanObjectNN, and provides functions for dataset preprocessing, model training, and visualization. The project aims to classify point clouds into different categories using deep learning techniques, enabling applications in object recognition, scene understanding, and robotics. The command-line interface allows easy configuration and training of the model.

Code

In my object detection project, I utilized the pre-trained YOLOv5 model from the Ultralytics repository to detect and classify emotions on human faces. Using the open-source software "labelme," I annotated images to create labeled datasets for training and validation. During the training phase, I fine-tuned the YOLOv5 model, optimizing its parameters to accurately identify "Happy" or "Neutral" expressions. Achieving high accuracy with the trained model, I then deployed the weights on an Android phone, developing a custom application for real-time emotion detection. This project showcases the practical application of object detection techniques for emotion recognition, with the ability to deploy such a solution on mobile devices.

Code

For my custom image classification project, I utilized a pretrained transformer model to achieve accurate results. By fine-tuning the model during the training phase and evaluating its performance during validation, I successfully adapted it to my specific image classification task. The pretrained transformer model's ability to capture intricate features and patterns in images proved highly effective in achieving high classification accuracy.

Code

Developed a novel approach to collaborative Simultaneous Localization and Mapping (SLAM) using a multi-robot system. The experiment employed two TurtleBot3 robots operating in a simulated Gazebo environment, with ROS as the integration platform. Using the gmapping algorithm, we achieved highly precise mapping of the environment. We also equipped each robot with autonomous exploration capabilities using explore_lite. The maps generated by the robots were merged using multirobot_map_merge, resulting in a comprehensive and detailed representation of the environment.

Project Report / Code / Demo Video / Slides
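To give a feel for the map-merging step in the multi-robot SLAM project above, here is a simplified Python sketch. It is not how the map-merging package used in the project works internally, since that package also has to estimate the transform between the robots' maps. The sketch assumes the two grids are already aligned and equally sized, and it uses the `nav_msgs/OccupancyGrid` cell convention (-1 unknown, 0 free, 100 occupied). The rule that the higher occupancy value wins when both robots have observed a cell is an assumption chosen to be conservative.

```python
UNKNOWN = -1  # cell not yet observed (nav_msgs/OccupancyGrid convention)


def merge_grids(a, b):
    """Merge two aligned occupancy grids given as lists of rows."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("grids must have identical dimensions")
    merged = []
    for row_a, row_b in zip(a, b):
        row = []
        for cell_a, cell_b in zip(row_a, row_b):
            if cell_a == UNKNOWN:
                # Only robot B (or neither) has seen this cell.
                row.append(cell_b)
            elif cell_b == UNKNOWN:
                row.append(cell_a)
            else:
                # Both observed it: keep the more occupied value.
                row.append(max(cell_a, cell_b))
        merged.append(row)
    return merged
```

For example, merging `[[0, -1], [100, -1]]` with `[[-1, 0], [0, -1]]` fills in each robot's unexplored cells from the other and keeps the obstacle at the bottom-left cell.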

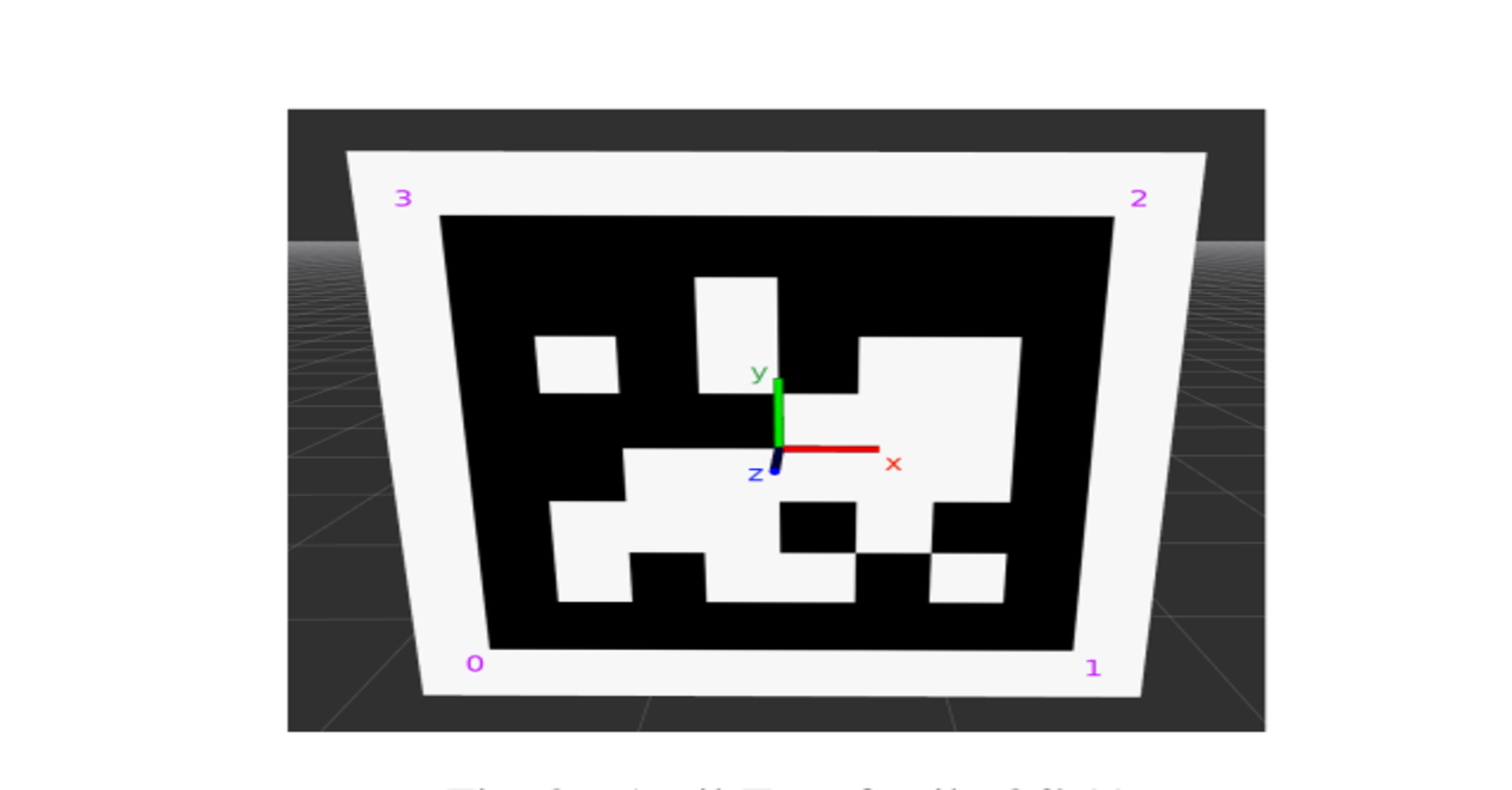

Our project focused on using the TurtleBot3 mobile robot platform for disaster response and reconnaissance. We used a range of ROS packages to build a system that included obstacle avoidance, autonomous navigation, mapping, and object detection. The system used a custom-built AprilTag detection system, which allowed the robot to identify and localize AprilTags in real-time. We overcame challenges associated with deploying mobile robots in complex environments by using ROS technology to ensure seamless communication and control. The system's TCP/IP protocol provided reliable and efficient data transmission between the TurtleBot3 and a master PC. Overall, our project demonstrated the potential of mobile robots for disaster response and showcased the effectiveness of using TurtleBot3 and ROS in these scenarios. Our contributions included designing and implementing the system, addressing technical challenges that arose during the project, and showcasing the advantages of using ROS in disaster response and reconnaissance.

Project Report / Code / Demo Video / Slides

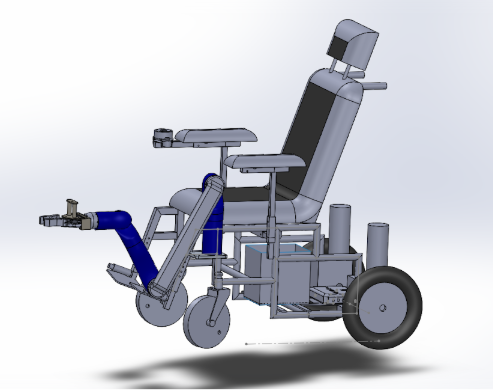

This project involves the development of an autonomous wheelchair with integrated voice control and a robotic arm. The system aims to perform human-robot interaction and cooperation, navigation and mapping in dynamic environments, door opening, and object exchange with a companion. To achieve this, the team developed their own navigation library and studied the dynamics of the wheelchair combined with a robotic arm. The system is validated on hardware and can be controlled through either speech commands or a mobile app. The team used ROS, Gmapping, the Navigation Stack, and AMCL for navigation, a custom planner named "HuT Planner" for path planning, and a Markov model for speech recognition. They also created an app for speech recognition and used Kinova software for the robotic arm. The project can find applications in various settings, including airports, households, and shopping malls, to assist physically disabled and elderly individuals in their daily activities.

Thesis / Code / More Info

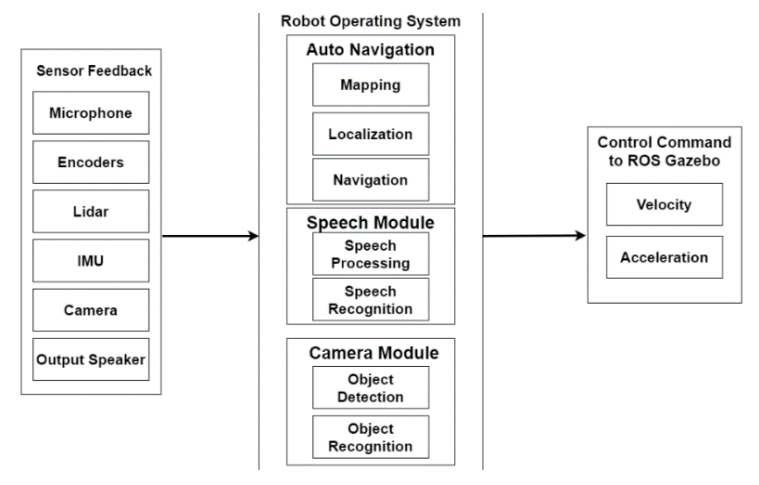

CHETAK is an autonomous home assistance robot designed to support individuals with disabilities and impairments in their daily activities. It features an advanced and cost-effective infrastructure with object vision, speech recognition, autonomous navigation with obstacle avoidance, and a 6 degree-of-freedom robotic arm, making it a sophisticated service robot. The robot can differentiate among objects based on class and can estimate attributes such as gender, age, and posture. It can receive commands from a person and perform tasks through either manual or autonomous navigation, choosing the shortest path while avoiding dynamic obstacles and navigating through extended waypoints. CHETAK uses the robotic arm with its end-effector and vision information to pick and place objects. The whole system is implemented using the Robot Operating System (ROS) on Ubuntu to integrate individual nodes for complex operations. CHETAK can perform human-robot interaction, object manipulation, and gesture recognition, helping people with physical disabilities by serving drinks, fruits, and similar items, and helping visually impaired people by finding objects or people for them.

Code / More Info / Demo Video - 1 / Demo Video - 2

Publications

Rajesh Kannan Megalingam, Vignesh S. Naick, Manaswini Motheram, Jahnavi Yannam, Nikhil Chowdary Gutlapalli, Vinu Sivanantham

TELKOMNIKA Telecommunication, Computing, Electronics and Control, 2023

Paper /

@InProceedings{author = {Rajesh Kannan Megalingam, Vignesh S. Naick, Manaswini Motheram, Jahnavi Yannam, Nikhil Chowdary Gutlapalli, Vinu Sivanantham},

title = {Robot Operating System based Autonomous Navigation Platform with Human-Robot Interaction},

booktitle = {TELKOMNIKA Telecommunication, Computing, Electronics and Control},

year = {2023},

}

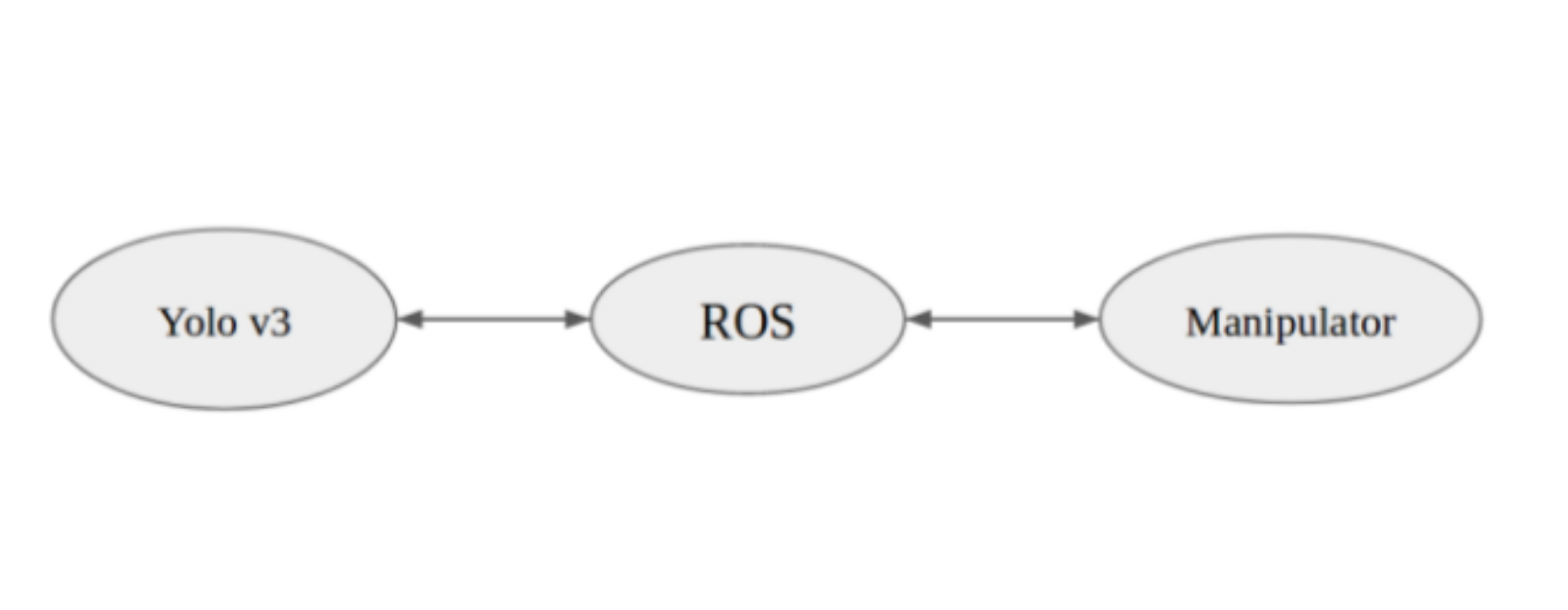

Rajesh Kannan Megalingam, Rokkam Venu Rohith Raj, Tammana Akhil, Akhil Masetti, Nikhil Chowdary Gutlapalli, Vignesh S. Naick

Second International Conference on Inventive Research in Computing Applications (ICIRCA), 2020

Paper /

@INPROCEEDINGS{9183013,author={Megalingam, Rajesh Kannan and Rohith Raj, Rokkam Venu and Akhil, Tammana and Masetti, Akhil and Chowdary, Gutlapalli Nikhil and Naick, Vignesh S},

booktitle={2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA)},

title={Integration of Vision based Robot Manipulation using ROS for Assistive Applications},

year={2020},

pages={163-169},

doi={10.1109/ICIRCA48905.2020.9183013}}


Rajesh Kannan Megalingam, Manaswini Motheram, Jahnavi Yannam, Vignesh S. Naick, Nikhil Chowdary Gutlapalli

Fourth International Conference on Inventive Systems and Control (ICISC), 2020

Paper / Slides /

@INPROCEEDINGS{9171065, author={Megalingam, Rajesh Kannan and Manaswini, Motheram and Yannam, Jahnavi and Naick, Vignesh S and Chowdary, Gutlapalli Nikhil},

booktitle={2020 Fourth International Conference on Inventive Systems and Control (ICISC)},

title={Human Robot Interaction on Navigation platform using Robot Operating System},

year={2020},

pages={898-905},

doi={10.1109/ICISC47916.2020.9171065}}


Rajesh Kannan Megalingam, Rokkam Venu Rohith Raj, Akhil Masetti, Tammana Akhil, Nikhil Chowdary Gutlapalli, Vignesh S. Naick

4th International Conference on Computing Communication and Automation (ICCCA), 2018

Paper /

@INPROCEEDINGS{8777578, author={Megalingam, Rajesh Kannan and Raj, Rokkam Venu Rohith and Masetti, Akhil and Akhil, Tammana and Chowdary, Gutlapalli Nikhil and Naick, Vignesh S},

booktitle={2018 4th International Conference on Computing Communication and Automation (ICCCA)},

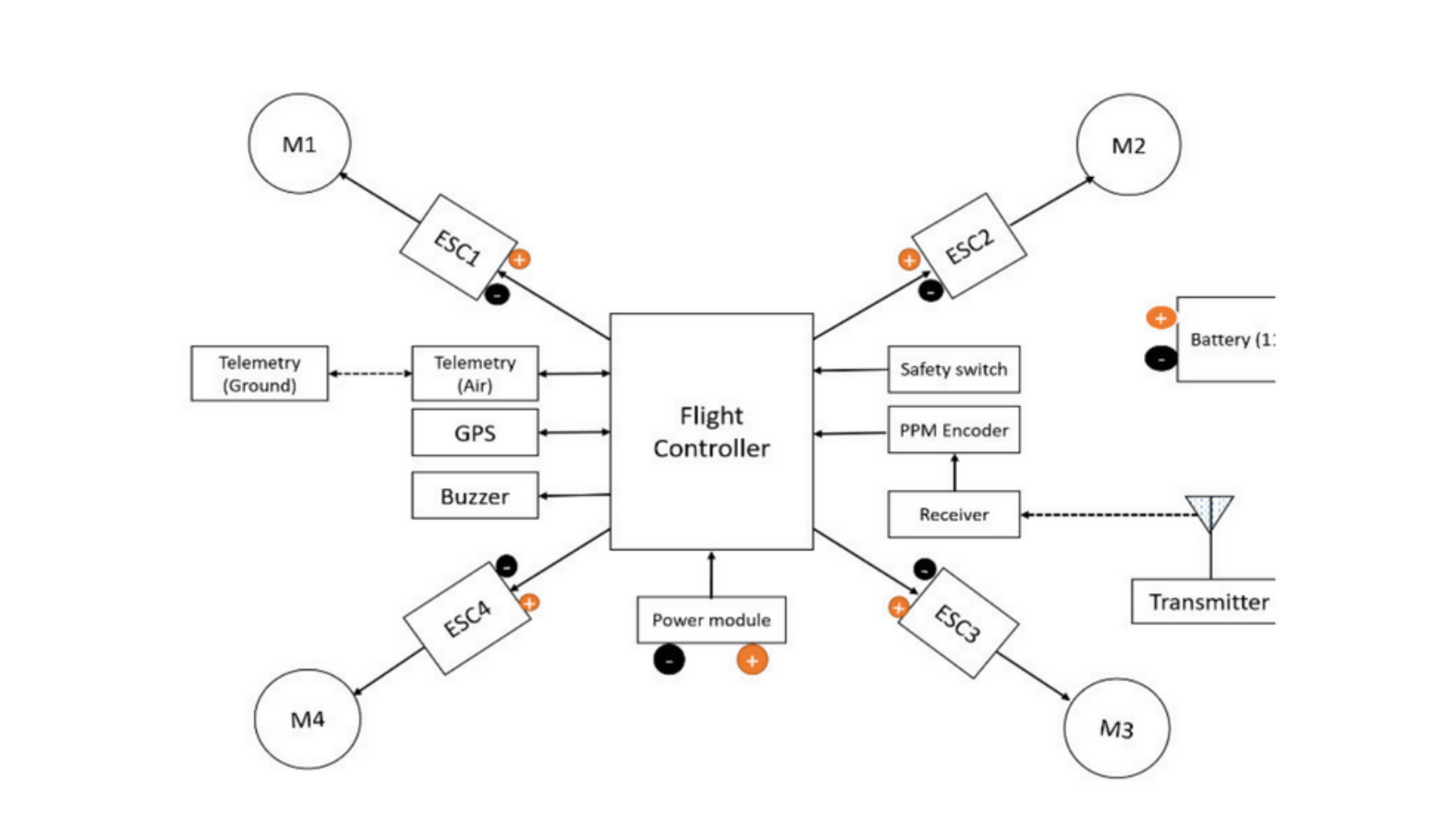

title={Design and Implementation of an Arena for Testing and Evaluating Quadcopter},

year={2018},

pages={1-7},

doi={10.1109/CCAA.2018.8777578}}

Competitions

- DATATHON - Community Code Competition - Boston 2023 🇺🇸

Participated in the Kaggle Community Code Competition, a data science competition organized by Northeastern University that challenged participants to develop algorithms for predicting whether a photograph is likely to be popular and generate a significant number of downloads.

Ranked in top 30% of participants

- Indy Autonomous Challenge - Indianapolis 2020 🇺🇸

Organized by Energy Systems Network, the Indy Autonomous Challenge (IAC) brings together university teams from around the world to compete in a series of challenges that advance technology for speeding the commercialization of fully autonomous vehicles and the deployment of advanced driver-assistance systems (ADAS) to increase safety and performance.

Qualified for the 2nd Round.

- World Robot Summit - Japan 2020 🇯🇵

The World Robot Summit (WRS) is a Challenge and Expo that brings together robot excellence from around the world. Out of 119 professional teams that applied from all over the world, we were the only team from India to qualify for the finals, where we took part in the "Future Convenience Store Challenge".

Won a cash prize of 1 million JPY.

- RoboCup@Home - Germany 2019 🇩🇪

The RoboCup@Home league aims to develop service and assistive robot technology with high relevance for future personal domestic applications. It is the largest international annual competition for autonomous service robots. A set of benchmark tests is used to evaluate the robots’ abilities and performance in a realistic non-standardized home environment setting.

Qualified for the 2nd Round.

Awards

- Aspiring Minds Motivational Award 2020 - Dept of ECE, Amrita University.

- Outstanding Student Researcher Award 2019 - HuT Labs.

- Certificate of Excellence 2019 - 2020 by IEEE Student Branch.